Deploy Satori Customer Hosted on Azure

The following section describes the main components of the Satori Customer Hosted (CH) Platform and how to deploy the solution on Azure.


Introduction to Satori CH for Azure

The Satori CH platform consists of two main components:

- The Satori Cyber Application service

- The Satori CH Data Access Controller (DAC) - a Kubernetes container that is either consumed as a service or deployed on an Azure Kubernetes Service (AKS) cluster inside the customer's VNet.

Deploying the Satori CH DAC

Deploy the Satori CH DAC in the same Azure region as the data stores the DAC is meant to protect.

For example, customers using SQL Server on Azure East US 2 should deploy the Satori CH DAC in a VNet in the same region.
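To confirm that a data store and the planned DAC VNet are in the same region, you can compare the `location` of both resources with the Azure CLI. This is a minimal sketch, assuming the data store is an Azure SQL logical server (as in the `acme1.database.windows.net` example) and that `az` is installed and logged in. The function names and all resource names are hypothetical placeholders.

```bash
# Sketch only: compares the region of an Azure SQL logical server with the
# region of the VNet that will host the Satori CH DAC.
# Assumes the Azure CLI (az) is installed and logged in.

# Lowercase and strip spaces so "East US 2" and "eastus2" compare equal.
normalize_region() {
  local r="${1,,}"
  echo "${r// /}"
}

# Usage: check_same_region <SQL_RG> <SQL_SERVER> <VNET_RG> <VNET_NAME>
check_same_region() {
  local sql_rg="$1" sql_server="$2" vnet_rg="$3" vnet_name="$4"
  local sql_loc vnet_loc

  sql_loc=$(az sql server show --resource-group "$sql_rg" --name "$sql_server" \
    --query location -o tsv) || return 1
  vnet_loc=$(az network vnet show --resource-group "$vnet_rg" --name "$vnet_name" \
    --query location -o tsv) || return 1

  if [[ "$(normalize_region "$sql_loc")" == "$(normalize_region "$vnet_loc")" ]]; then
    echo "OK: SQL server and VNet are both in $sql_loc"
  else
    echo "Region mismatch: SQL server in $sql_loc, VNet in $vnet_loc" >&2
    return 1
  fi
}
```

For example, `check_same_region rg-data acme1 rg-network satori-dac-vnet` returns non-zero if the two resources are in different regions.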

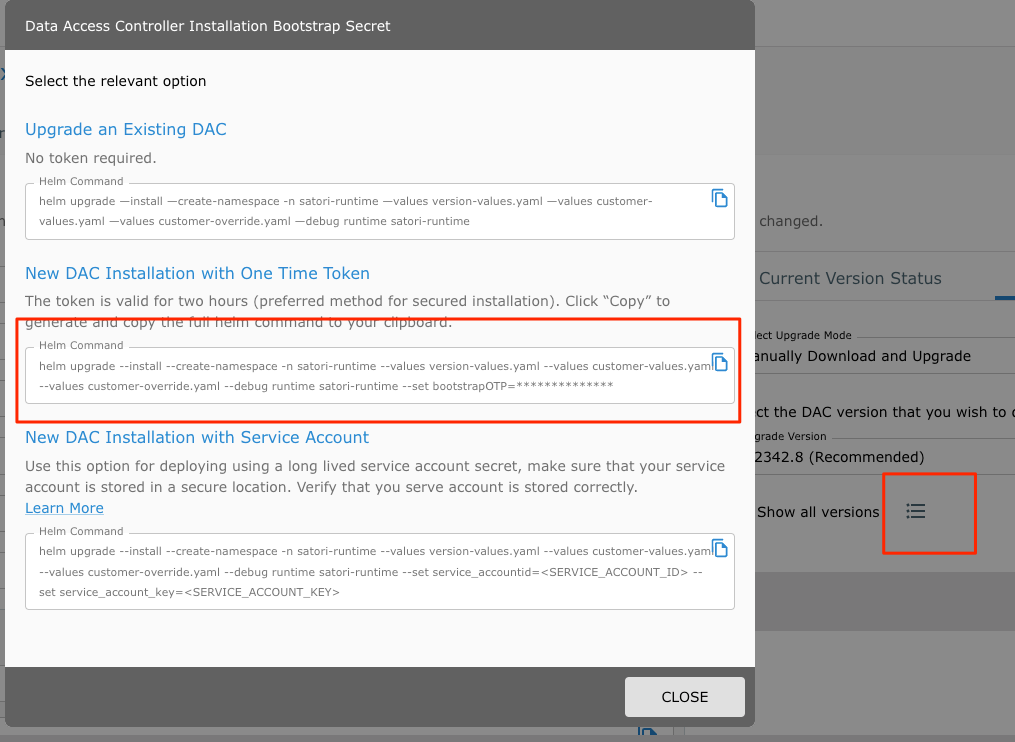

Illustration 1 - High-Level Architecture of a Satori Deployment on Azure

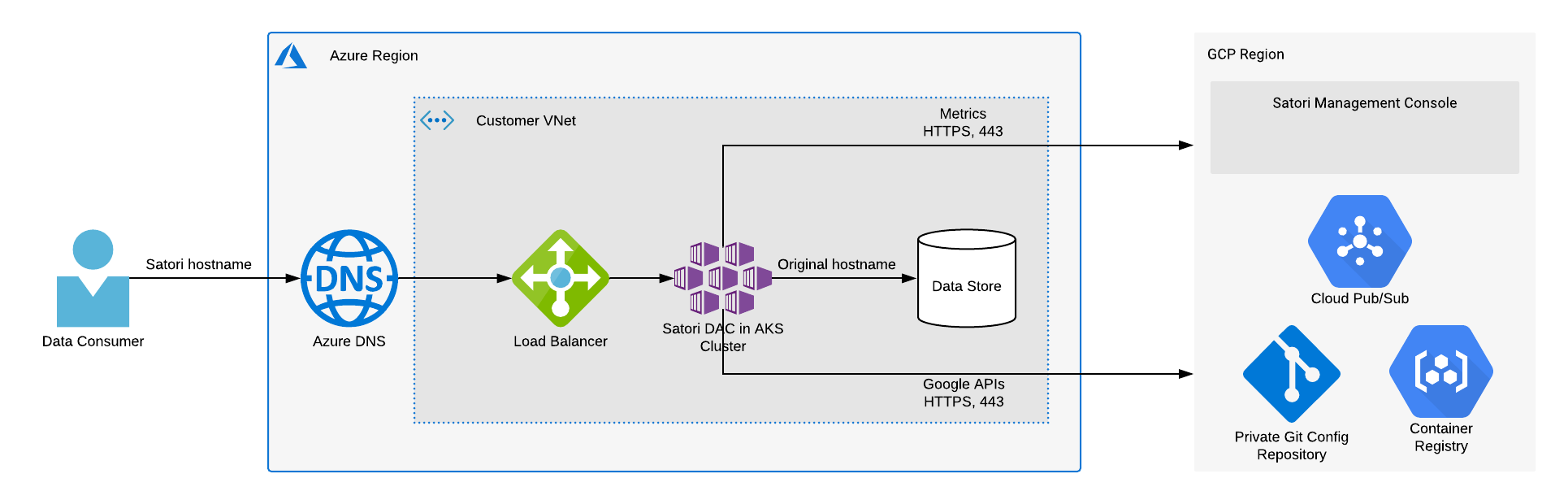

Illustration 2 - Kubernetes Cluster Architecture

Users access the Satori CH DAC through a dedicated hostname for each data store that the DAC protects.

For example, to access a SQL Server database hosted in Azure East US at acme1.database.windows.net, data consumers instead connect to acme1.eastus.z.p1.satoricyber.net.

The new hostname resolves to an internal-facing load balancer that routes traffic to the Satori DAC inside the AKS cluster.
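One way to check where a Satori hostname points is to resolve it and test whether the address is private (RFC 1918), as expected for an internal load balancer. This is a minimal sketch that relies on `getent`, which ships with most Linux distributions; the function names are hypothetical.

```bash
# Sketch only: resolve a Satori DAC hostname and report whether it points at
# a private (RFC 1918) address, as expected for an internal load balancer.

# Returns 0 if the dotted-quad IPv4 address is in 10/8, 172.16/12 or 192.168/16.
is_private_ipv4() {
  local a b c d
  IFS=. read -r a b c d <<<"$1"
  [[ -n "$d" ]] || return 1
  (( a == 10 )) && return 0
  (( a == 172 && b >= 16 && b <= 31 )) && return 0
  (( a == 192 && b == 168 )) && return 0
  return 1
}

# Prints the first IPv4 address the hostname resolves to (empty if none).
resolve_ipv4() {
  getent ahostsv4 "$1" | awk 'NR == 1 { print $1 }'
}

# Usage: check_dac_hostname <SATORI_HOSTNAME>
check_dac_hostname() {
  local host="$1" ip
  ip=$(resolve_ipv4 "$host")
  if [[ -z "$ip" ]]; then
    echo "$host does not resolve" >&2
    return 1
  fi
  if is_private_ipv4 "$ip"; then
    echo "$host -> $ip (private)"
  else
    echo "$host -> $ip (public)"
  fi
}
```

For example, `check_dac_hostname acme1.eastus.z.p1.satoricyber.net`, run from inside the VNet, should report a private address for an internal-facing DAC.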

Satori CH DAC Network Configuration

The Satori CH DAC requires the following network path configurations:

- User Connection to the Satori DAC - Users connect to data stores via the Satori DAC, so a network path from users to the Satori DAC is required.

- Satori DAC Connection to the Data Store - The Satori DAC receives queries from users and forwards them to the data stores it protects, so a network path from the Satori DAC to the data stores is required. Typically, this is established by deploying the Satori DAC in the same VNet as the data stores it protects and ensuring that the Azure network security groups (NSGs) allow access from the Satori DAC to the data stores.

- Outbound HTTPS (port 443) to app.satoricyber.com, .google.com, .googleapis.com and us-docker.pkg.dev - Satori uses several Google Cloud Platform (GCP) services, as well as a Git repository that contains the Satori DAC's configuration files, a secret manager for secure storage of secrets, and a messaging service that publishes data access metadata shared with the management console. For the full list of fields that are sent, see Metadata Shared by Data Access Controllers with the Management Console.

- Outbound HTTPS (port 443) to cortex.satoricyber.net, alert1.satoricyber.net, alert2.satoricyber.net and alert3.satoricyber.net - Product telemetry (metrics) is uploaded to these endpoints.
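Before deploying, you can verify outbound connectivity from inside the DAC's VNet (for example, from a VM in the same subnet). This is a minimal sketch, assuming bash's `/dev/tcp` pseudo-device and coreutils `timeout` are available. It only tests that a TCP connection opens on port 443; it does not prove that a TLS-inspecting proxy or firewall will pass the HTTPS traffic. The `.google.com` and `.googleapis.com` entries are domains rather than single hosts, so they are not probed. The variable and function names are hypothetical.

```bash
# Sketch only: check outbound TCP connectivity on port 443 to the concrete
# hostnames from the network requirements above.
SATORI_EGRESS_HOSTS=(
  app.satoricyber.com
  us-docker.pkg.dev
  cortex.satoricyber.net
  alert1.satoricyber.net
  alert2.satoricyber.net
  alert3.satoricyber.net
)

# Returns 0 if a TCP connection to host:port opens within 5 seconds.
can_connect() {
  local host="$1" port="${2:-443}"
  timeout 5 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Prints OK/FAIL per host and returns non-zero if any host is unreachable.
check_egress() {
  local host failed=0
  for host in "${SATORI_EGRESS_HOSTS[@]}"; do
    if can_connect "$host" 443; then
      echo "OK   ${host}:443"
    else
      echo "FAIL ${host}:443"
      failed=1
    fi
  done
  return "$failed"
}
```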

Private or Public Facing Data Access Controller

You can deploy either a private Satori CH DAC that is reachable only from within the VNet, or a public, internet-facing Satori CH DAC.

Azure Internal Load Balancer

If you want to use an internal load balancer that is placed in a predefined subnet, open a support ticket with the Satori support team so they can update the deployment package with the name of the subnet.

In some cases, the cluster's service principal might not have the permissions required to create a load balancer in the specified subnet. To grant them, run the following commands before deployment:

az aks show --resource-group <RG_NAME> --name <CLUSTER_NAME> --query servicePrincipalProfile.clientId -o tsv

az ad sp show --id <CLUSTER_SP_CLIENT_ID> --query id -o tsv

az role assignment create --role "Contributor" --resource-group <RG_NAME> --assignee-object-id <CLUSTER_SP_OBJECT_ID>

Azure CLI 2.37 and later return the service principal's object ID as id; earlier versions use objectId.
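The three commands above can be chained in one function so that each output feeds the next command. This is a minimal sketch, assuming a logged-in Azure CLI with rights to create role assignments on the resource group, and Azure CLI 2.37 or later (where the service principal's object ID is returned as `id`). The function name is hypothetical. Clusters that use a managed identity report `msi` instead of a client ID, so the sketch stops in that case.

```bash
# Sketch only: grant the AKS cluster's service principal the Contributor
# role on a resource group, following the three az commands above.
# Usage: grant_cluster_sp_contributor <RG_NAME> <CLUSTER_NAME>
grant_cluster_sp_contributor() {
  local rg="$1" cluster="$2" client_id object_id

  # Client (application) ID of the cluster's service principal.
  client_id=$(az aks show --resource-group "$rg" --name "$cluster" \
    --query servicePrincipalProfile.clientId -o tsv) || return 1

  # Managed-identity clusters report "msi" here instead of a client ID.
  if [[ "$client_id" == "msi" ]]; then
    echo "Cluster $cluster uses a managed identity, not a service principal" >&2
    return 1
  fi

  # Object ID of the service principal (returned as "id" on Azure CLI 2.37+).
  object_id=$(az ad sp show --id "$client_id" --query id -o tsv) || return 1

  az role assignment create --role "Contributor" --resource-group "$rg" \
    --assignee-object-id "$object_id" --assignee-principal-type ServicePrincipal
}
```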

For more information, see the Azure documentation.

Load balancer stuck in "pending" state - Customers that use the Azure CNI networking option with the "System-assigned managed identity" authentication mode may encounter this issue.

To avoid it, make sure the cluster is created with the "Service principal" authentication mode. For more information, see Internal Load Balancer using Azure Stuck on Pending.
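To diagnose a pending load balancer, you can list the LoadBalancer services and check which identity type the cluster uses. This is a minimal sketch, assuming `kubectl` points at the AKS cluster, `az` is logged in, and the DAC runs in the `satori-runtime` namespace used by the Helm command on this page. A service with no ingress IP is reported as pending. An empty `identity.type` indicates a service principal cluster. The function names are hypothetical.

```bash
# Sketch only: list LoadBalancer services and their assigned IPs, flagging
# any service that has no IP yet. Usage: check_lb_services [NAMESPACE]
check_lb_services() {
  local ns="${1:-satori-runtime}" name ip pending=0
  while IFS=$'\t' read -r name ip; do
    [[ -z "$name" ]] && continue
    if [[ -z "$ip" ]]; then
      echo "PENDING $name"
      pending=1
    else
      echo "READY $name $ip"
    fi
  done < <(kubectl get svc -n "$ns" -o jsonpath='{range .items[?(@.spec.type=="LoadBalancer")]}{.metadata.name}{"\t"}{.status.loadBalancer.ingress[0].ip}{"\n"}{end}')
  return "$pending"
}

# Reports the cluster's authentication mode from its identity type.
# Usage: cluster_auth_mode <RG_NAME> <CLUSTER_NAME>
cluster_auth_mode() {
  local id_type
  id_type=$(az aks show --resource-group "$1" --name "$2" \
    --query identity.type -o tsv) || return 1
  case "$id_type" in
    SystemAssigned) echo "system-assigned managed identity" ;;
    UserAssigned) echo "user-assigned managed identity" ;;
    "") echo "service principal" ;;
    *) echo "$id_type" ;;
  esac
}
```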

The following section describes how to deploy a typical AKS cluster.

Prerequisites

To deploy an AKS cluster, make sure you have the following access privileges and that the following third-party tools are installed:

- Administrator-Level Access to the Following Azure Services - Access control (IAM), Virtual Network (VNet), NAT Gateway, Load Balancer, Key Vault, AKS.

- Helm 3 is installed on the command line - To verify that Helm is installed, run the following command:

helm version

To download Helm, go to Helm.

- kubectl is installed on the command line - To verify that kubectl is installed, run the following command:

kubectl version --client

To download kubectl, go to Kubernetes Tool Installations.
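The prerequisite checks above can be combined into a single command that fails if a tool is missing or if Helm is not version 3. This is a minimal sketch, assuming `helm version --short` prints a version string starting with `v3.` for Helm 3. The function names are hypothetical.

```bash
# Sketch only: verify that the command-line tools listed above are available.

# Returns non-zero (with a message) if the named command is not found.
require_tool() {
  if ! command -v "$1" >/dev/null 2>&1; then
    echo "Missing required tool: $1" >&2
    return 1
  fi
}

# Usage: check_prerequisites   (returns non-zero if anything is missing)
check_prerequisites() {
  local missing=0
  require_tool helm || missing=1
  require_tool kubectl || missing=1

  # Helm 3 prints its version as "v3.x.y+g<commit>" with --short.
  if command -v helm >/dev/null 2>&1 && \
     [[ "$(helm version --short 2>/dev/null)" != v3.* ]]; then
    echo "Helm 3 is required" >&2
    missing=1
  fi
  return "$missing"
}
```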

Recommended Cluster Specification

- Creating Nodes - 3 Standard_D2s_v5 or similar nodes. Satori recommends creating three node pools. Since Satori workloads use persistent volumes, we strongly recommend creating a single node pool in each availability zone: Azure managed disks with locally redundant storage (the AKS default) are zonal, so a pod that uses a persistent volume can only run on a node in that volume's zone, and a per-zone node pool lets the cluster autoscaler add a node in the right zone.

- Subnet Configuration - 3 subnets, one for each availability zone's node pool, with a NAT gateway or a similar solution deployed for outbound connectivity to the internet.

- Cluster Scaling - Each node pool should have the cluster autoscaler enabled, with a minimum of 1 node and a maximum of 3 nodes (sufficient for most workloads).
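A cluster that follows the recommended specification above could be created along these lines: Azure CNI networking, service principal authentication (which avoids the pending load balancer issue described earlier), and three Standard_D2s_v5 node pools, one per availability zone and subnet, each autoscaling between 1 and 3 nodes. This is a minimal sketch, not Satori's deployment procedure. The function name, node pool names (`pool1` to `pool3`), and all arguments are hypothetical placeholders. Passing the client secret on the command line exposes it in the shell history and process list, so adapt that part to your secret handling.

```bash
# Sketch only: AKS cluster matching the recommended specification above.
# Usage: create_satori_aks <RG> <CLUSTER> <SP_APP_ID> <SP_SECRET> \
#          <SUBNET_ID_ZONE1> <SUBNET_ID_ZONE2> <SUBNET_ID_ZONE3>
create_satori_aks() {
  local rg="$1" cluster="$2" sp_id="$3" sp_secret="$4"
  local subnets=("$5" "$6" "$7") zone

  # The zone 1 node pool is created together with the cluster.
  az aks create --resource-group "$rg" --name "$cluster" \
    --network-plugin azure \
    --service-principal "$sp_id" --client-secret "$sp_secret" \
    --vnet-subnet-id "${subnets[0]}" \
    --nodepool-name pool1 --zones 1 \
    --node-vm-size Standard_D2s_v5 --node-count 1 \
    --enable-cluster-autoscaler --min-count 1 --max-count 3 \
    --generate-ssh-keys || return 1

  # One more node pool per remaining zone, each in its own subnet.
  for zone in 2 3; do
    az aks nodepool add --resource-group "$rg" --cluster-name "$cluster" \
      --name "pool${zone}" --zones "$zone" \
      --vnet-subnet-id "${subnets[zone-1]}" \
      --node-vm-size Standard_D2s_v5 --node-count 1 \
      --enable-cluster-autoscaler --min-count 1 --max-count 3 || return 1
  done
}
```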

Satori CH Hostname DNS

Satori CH generates a unique hostname for each data store it protects, within a DNS zone that is unique to each Satori CH DAC. For private-facing Satori CH DACs, customers should host the DNS zone on a DNS service such as Azure DNS.

For public-facing Satori CH DACs, Satori provides the option to use Google DNS at no extra cost.

The DNS zone has a root hostname that points to the load balancer, and a wildcard entry for the data-store-specific hostnames. For example:

$ORIGIN acme1.eastus.z.p1.satoricyber.net.

*.acme1.eastus.z.p1.satoricyber.net. A 20.14.113.2
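For a private-facing DAC, a zone like the one above could be hosted in Azure Private DNS. This is a minimal sketch, assuming a logged-in Azure CLI, a zone and VNet in the same resource group, and the internal load balancer's IP (which will be a private address, unlike the public example address above). It creates the zone, links it to the VNet that clients resolve from, and adds root (`@`) and wildcard (`*`) A records. The function name and the `<VNET>-link` link name are hypothetical.

```bash
# Sketch only: host the DAC's DNS zone in Azure Private DNS.
# Usage: create_dac_private_zone <RG> <ZONE> <VNET_NAME> <LB_IP>
create_dac_private_zone() {
  local rg="$1" zone="$2" vnet="$3" lb_ip="$4" record

  az network private-dns zone create --resource-group "$rg" --name "$zone" || return 1

  # Link the zone to the VNet without auto-registering VM records.
  az network private-dns link vnet create --resource-group "$rg" \
    --zone-name "$zone" --name "${vnet}-link" \
    --virtual-network "$vnet" --registration-enabled false || return 1

  # Root and wildcard A records, both pointing at the load balancer.
  for record in "@" "*"; do
    az network private-dns record-set a add-record --resource-group "$rg" \
      --zone-name "$zone" --record-set-name "$record" \
      --ipv4-address "$lb_ip" || return 1
  done
}
```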

Virtual Machine Configurations

A minimum of 2 DS2 v2 VMs is required by the Satori CH DAC, with each VM processing up to 20 MB/s of data traffic. This is sufficient for most deployments; add more VMs if you expect higher traffic loads. The DAC is horizontally scalable and automatically requests more resources when it needs them.
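The sizing figures above translate into a simple estimate: divide the expected peak throughput by 20 MB/s per VM, round up, and never go below 2 VMs. This is a minimal sketch, assuming throughput scales linearly with VM count and adding no headroom, so treat the result as a starting point rather than a guarantee. The function name is hypothetical.

```bash
# Sketch only: estimate DAC VM count from expected peak throughput.
# Usage: dac_vms_needed <PEAK_MB_PER_SECOND>   (whole MB/s)
dac_vms_needed() {
  local peak_mbps="$1" per_vm=20 min_vms=2 vms
  # Integer ceiling division: ceil(peak / per_vm).
  vms=$(( (peak_mbps + per_vm - 1) / per_vm ))
  if (( vms < min_vms )); then
    vms=$min_vms
  fi
  echo "$vms"
}
```

For example, `dac_vms_needed 45` prints 3, while any peak up to 40 MB/s yields the minimum of 2.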

Deployment Client

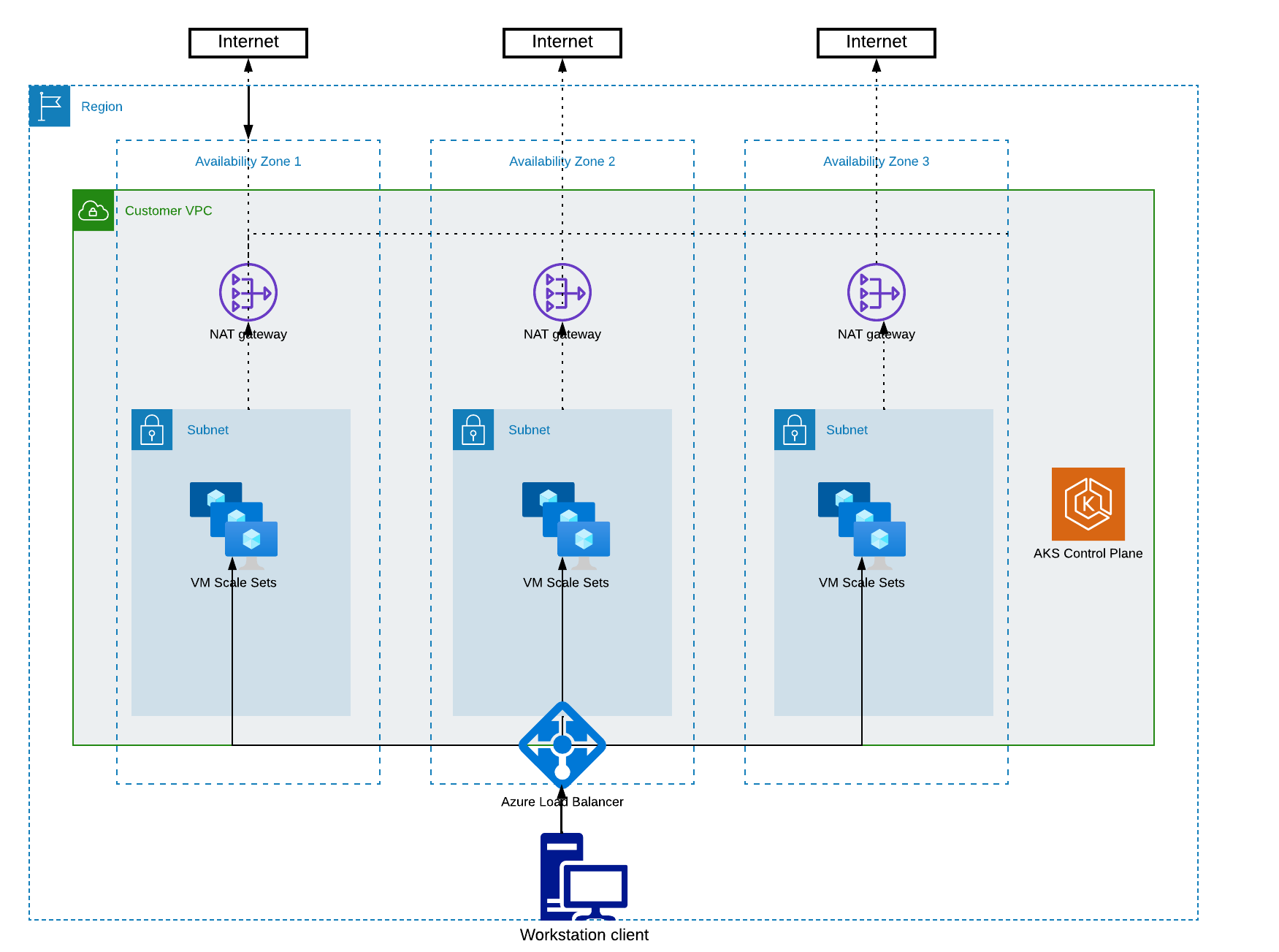

Satori provides a Helm 3 command to deploy the Satori CH DAC on the Kubernetes cluster.

Perform the following steps to extract and deploy the Satori DAC package:

- Log in to the Satori management console at Satori Management Console.

- Go to Settings, Data Access Controllers and select the DAC to deploy. Contact Satori support if a new DAC needs to be created.

- Select the Upgrade Settings tab and download the recommended deployment package.

- Extract the deployment package and, in a terminal window, change to the extracted directory. For example:

tar -xf satori-dac-1.2405.2.tar

cd satori-2405

- Run the following command (required only once, during the first installation):

kubectl apply -f runtime-prometheus-server.yaml

- Change to the satori-runtime directory and run the Helm command, passing the one-time password as bootstrapOTP:

cd satori-runtime

helm upgrade --install --create-namespace -n satori-runtime --values version-values.yaml --values customer-values.yaml --values customer-override.yaml --set bootstrapOTP=<ONE_TIME_PASSWORD>

The one-time password is valid for one hour and can be used only one time.
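Because the one-time password expires after an hour, it can help to confirm that the package is complete before running the Helm command, and to watch the pods afterwards. This is a minimal sketch: `verify_package` checks for the files that the commands above reference, and `wait_for_dac` waits for the pods in `satori-runtime` to become Ready. The function names and the 10-minute timeout are assumptions. If the chart runs Jobs, their completed pods never report Ready, so narrow the wait to the relevant pods in that case.

```bash
# Sketch only: pre- and post-deployment checks around the steps above.

# Confirms the extracted package contains the files the commands reference.
# Usage: verify_package <EXTRACTED_DIR>
verify_package() {
  local dir="$1" f missing=0
  for f in runtime-prometheus-server.yaml \
           satori-runtime/version-values.yaml \
           satori-runtime/customer-values.yaml \
           satori-runtime/customer-override.yaml; do
    if [[ ! -f "$dir/$f" ]]; then
      echo "Missing from package: $f" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Waits up to 10 minutes for all pods in the namespace to become Ready.
# Usage: wait_for_dac [NAMESPACE]
wait_for_dac() {
  local ns="${1:-satori-runtime}"
  kubectl wait --for=condition=Ready pod --all -n "$ns" --timeout=600s
}
```

For example, run `verify_package satori-2405` before the Helm command and `wait_for_dac` after it.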

Upgrading Satori CH DAC

To upgrade your Satori CH DAC and get the latest features, fixes, and security improvements, see the Upgrading Satori section.